Among those, we see that users struggle with getting started with automations and understanding how their programs are performing.

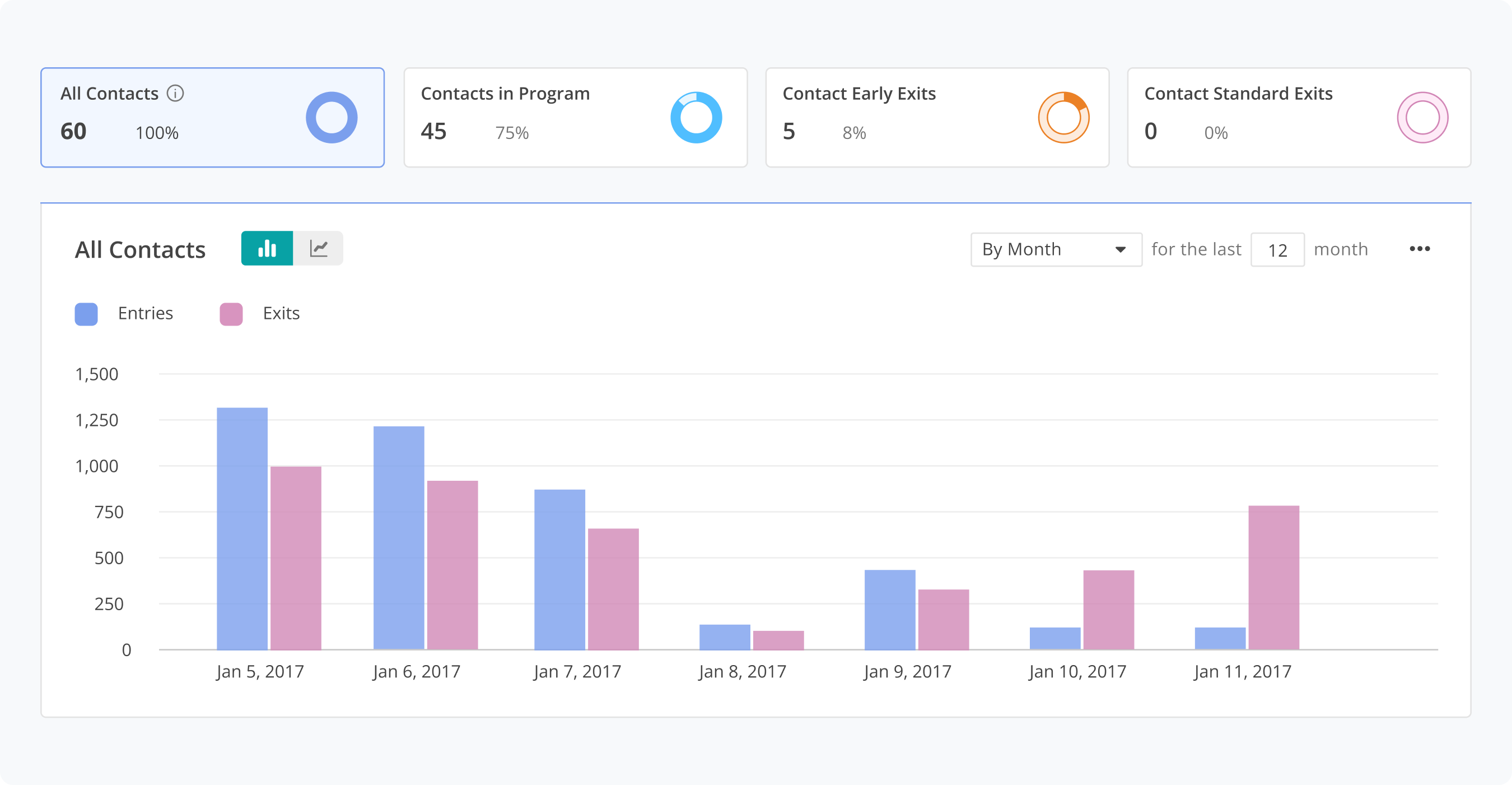

Adoption and engagement rates for the Automation Dashboard have remained consistently high since the product's initial launch, and customer satisfaction has improved significantly. However, a few outstanding customer requests still need to be resolved.

We talked to our internal stakeholders, including the Customer Success, Tech Support, and Marketing teams, to understand their pain points.

We consolidated what we learned from these interviews and narrowed down the features for a user-friendly Automation Dashboard.

The team designed mid-fi prototypes and prepared for the first round of usability testing.

The team tested the mid-fi prototype with users to validate some of our design assumptions. We then refined some of our design decisions in a high-fidelity prototype and tested it again.

The team is actively gathering feedback from customer calls and testing sessions to refine and enhance the feature. This iterative process allows us to continuously improve the product with timely customer feedback.